Google: Android phones, follow my lead on AI

One month ahead of Apple, Google showed off what AI-powered phones can do with its new Pixel lineup.

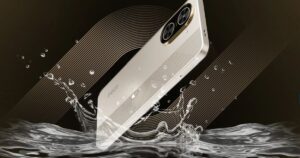

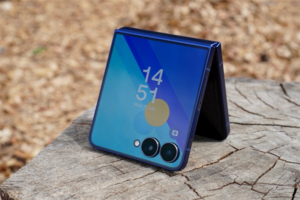

On August 13 (local time), Google unveiled new hardware including the Pixel 9, the Pixel 9 Pro, its second-generation foldable Pixel 9 Pro Fold, a smartwatch, and earbuds. Beyond the hardware, though, people were more interested in how Google, as the steward of Android, would bring on-device AI to Android phones at a time when AI phones are all the rage.

Almost 10 months ago, Google launched its first generation of AI phones. Now, less than a year later, the giant has once again updated its Gemini, Android, and Pixel portfolio. This time, the overarching theme is "whatever competitors have, we have too."

Ever since OpenAI launched ChatGPT's voice mode and the AI features of Apple's next-generation iPhone were revealed, Google has been determined not to fall behind its rivals on anything they can do, from AI voice conversations to pulling information out of screenshots. At the same time, Google is weaving AI more deeply into its own app ecosystem.

Google now has to compete with Apple for leadership in AI phones.

In response to OpenAI, Gemini Live goes live

Gemini is the cornerstone of Google's AI products and the default assistant on the Pixel 9 series. How Gemini has been upgraded for its integration into the phone was a major focus of the launch event.

Gemini on Google Pixel phones can be summoned by pressing the power button. According to Google executives, starting today, users can call up Gemini’s overlay on top of the apps they are using and ask questions about what’s on the screen. For example, users can ask questions about the YouTube video they are watching, and users can also generate images directly from Gemini’s overlay and drag and drop them into apps such as Gmail and Google Messages.

Google also plans to connect Gemini to more apps in the "coming weeks," including Google Calendar, Keep, and YouTube Music. Example uses include asking Gemini to "make a playlist of songs that remind me of the late 90s," taking a photo of a concert flyer and asking Gemini to check whether you're free that day (and even set a reminder to buy tickets), or having Gemini find a recipe in your Gmail and add the ingredients to your shopping list in Keep.

Gemini uses screenshots to extract information and interact with more applications | Image source: Google

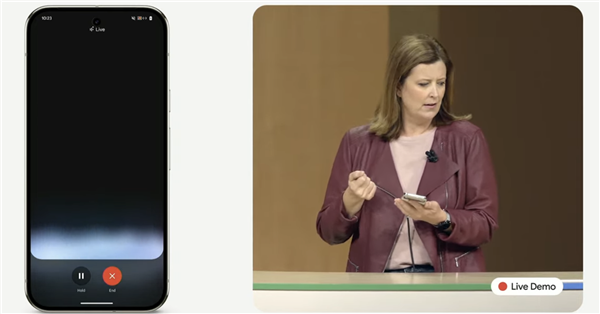

In addition to these integrated experiences, Google has officially launched Gemini Live, which is effectively Google's answer to the advanced voice mode of OpenAI's GPT-4o. The feature was first previewed at Google I/O 2024 and launched officially today.

Users can hold voice conversations with Gemini, Google's generative AI chatbot, on their phones, interrupting it midway to ask follow-up questions and pausing or resuming the conversation at any time. Suggested uses include having Gemini Live help you prepare for a job interview, rehearse a speech, or talk through whatever is on your mind.

Gemini Live is clearly targeting GPT-4o's voice interaction | Image source: Google

So, does Gemini Live have an advantage over ChatGPT’s voice mode?

The generative AI models that power Live, Gemini 1.5 Pro and Gemini 1.5 Flash, reportedly have longer-than-average "context windows," meaning they can take in and reason over large amounts of data before generating a response, which in theory allows conversations lasting hours.

Gemini Live can also be used hands-free, allowing users to continue voice conversations when the app is in the background or the phone is locked, with 10 new voices to choose from.

It is worth noting, however, that Gemini Live does not yet support "multimodal input," which Google says will arrive "later this year."

A few months ago, Google released a pre-recorded video showing Gemini Live recognizing the user's surroundings from photos and video captured by the phone's camera and responding to them, for example pointing out a part on a broken bicycle or explaining what a piece of code on a computer screen does. None of this was actually demonstrated live at the event.

Moreover, Gemini Live is available only to Gemini Advanced subscribers on Android, so it is not free. It currently supports only English, but Google said it will expand to more languages in the "coming weeks" and come to iOS through an app.

Google executives demonstrated Gemini Live on stage | Image source: Google

Google’s executives working on the Gemini experience and Google Assistant said “Google is in the early stages of exploring all the ways our AI-powered Assistant can be useful — and just like the Pixel phones, Gemini will only get better.”

On privacy, executives from Google's Android ecosystem said that Gemini supports hundreds of phone models from dozens of device manufacturers, and that for some of the most sensitive tasks, user data is processed without ever leaving the phone.

“Gemini can help you create a daily workout plan based on an email from your personal trainer, or write a job profile using your resume in Google Drive. Only Gemini can do all of this in a secure, integrated way, without handing over your data to a third-party AI provider you may not know or trust,” said Sameer Samat, president of Android Ecosystem at Google.

“Because Android is the first mobile operating system with a large-scale on-device multimodal AI model — called Gemini Nano — your data never leaves your phone when handling some of the most sensitive use cases.”

AI is further integrated into Android

Google's biggest advantage in pushing AI phones is undoubtedly its broad portfolio of apps and the Android ecosystem. With billions of Android users, Google clearly has far more room to maneuver than a standalone chatbot app does.

Last year, Google's Pixel 8 series, billed as the first AI-centric smartphone, brought a range of AI features. For example, users could remove, move, or edit individual elements in photos, swap facial expressions between shots to get the best composite group photo, and search based on a screenshot or on something circled on the screen.

All of these features debuted on the Pixel 8 series and were later rolled out, to varying degrees, across the wider Android ecosystem.

Google's "Circle to Search" feature actually first appeared on Samsung's Galaxy AI phones. As Google's Android ecosystem partner, Samsung has also added many similar AI features to its phones, and the Gemini assistant mentioned above also ships on phones such as Samsung's Galaxy Z Fold6 and the Motorola Razr+.

Meanwhile, other manufacturers have announced their own mobile AI efforts. The most closely watched of these in recent months has been Apple, whose approach likewise centers on integrating AI into its own app ecosystem.

Over the past year, "AI phone" has become the main lens through which the market views smartphones, not just Google's. How to use AI to impress people once again is a challenge for Google. For now, much as with the launch of its first AI phone, the Pixel 8, most of what Google is shipping is still an assortment of AI feature gadgets.

At this year's Made by Google event, Google highlighted new AI features including:

“Add Me” allows even the person taking the photo to include themselves in the photo;

“Pixel Studio,” an AI image generator that’s very similar to Apple’s upcoming Image Playground app;

“Pixel Screenshots,” which scans the screenshots in a user’s gallery and turns them into an easily searchable database;

"Call Notes," which saves a summary of a call in the call log. When the feature is turned on, everyone on the call receives a notification.

To use "Add Me," the photographer first takes a group photo without themselves in it, and then someone else takes a second photo that includes the photographer. The Pixel merges the two shots so that everyone appears in the same photo, with no need to ask a stranger for help.

Group photo function | Image source: Google

Another major selling point of Google’s Pixel 9 series is the AI camera, which it calls “the world’s first AI-driven camera.” Google executives also said that “Pixel is the first phone to use night vision in photos and videos, and now it is also the first phone to capture magnificent panoramic landscapes and cityscapes in low-light environments.” At the press conference, Google executives also compared the photos taken by the Pixel 9 Pro XL with those of Apple’s iPhone 15 Pro Max.

Pixel vs. iPhone night photography | Image source: Google

The large-screen Pixel 9 Pro Fold also has a "Made You Look" feature designed to catch the attention of the people being photographed and get them to smile at the camera. With the phone unfolded, an eye-catching animation, such as a bright yellow chick or another playful character, plays on the outer screen facing the subjects.

Outer-screen feature that grabs subjects' attention | Image source: Google

After taking a photo, there are also tools for retouching. Google Photos' Magic Editor gains several new features this year. "Auto Frame," for example, straightens tilted photos and uses generative AI to fill in the area around the subject for a wider field of view. "Reimagine" lets you describe the effect you want in a text box and then retouches the photo with generative AI, for instance turning grass into wildflowers or adding a hot air balloon to part of the sky.

In addition, Google is following in Apple’s footsteps and launching a “Satellite SOS” feature for use in emergencies, which allows users to contact emergency responders and share location information when there is no cellular service. According to Google executives, the Pixel 9 series will be “the first Android phones to be able to use Satellite SOS.”

Satellite SOS function | Image source: Google

In terms of features, the Pixel 9 plus Gemini combination is not far ahead of what Chinese Android manufacturers currently offer in AI. But it should be noted that, unlike Apple, Google has not only its own operating system and devices but also its own large models and cloud computing. It is the only company that has truly closed the loop of "software, hardware, chips, and cloud."

Once there is a breakthrough in on-device AI, a well-prepared Google has a much better chance than its competitors of becoming "great again."

Perhaps, what Google lacks is just a greater ambition.

Kazam is focused on creating and reporting timely technology content, with a special focus on mobile phone technology. Kazam reports on, analyzes, and reviews recent trends, news, and rumors in mobile phone technology and aims to provide the best possible insights to enhance your experience and knowledge.